AI Messaging Loses in Tests (But Brands Use it Anyway)

Looking at AI trends and best practices is tricky. The industry is evolving at such a velocity that what applies today may be materially different by 2026.

Still, as dynamic brands, we have to adapt and maximize value at each stage of the game.

With this in mind, my research team dug into hundreds of AI A/B tests run on B2B SaaS websites, detected with our patented technology at DoWhatWorks, and came across some notable trends.

#1 Brands ditch AI messaging in their headers

In 2023, we saw a surge of SaaS brands adding “AI-Powered” or “AI-driven” to their headlines and site copy. A quick look through the Wayback Machine for the top 100 SaaS brands will show you how pervasive it was.

Most companies raced to add basic ChatGPT wrappers, but outside of a few SaaS front-runners like Zapier and Intercom, the majority of AI features had limited practical application.

But regardless of the limitations, the optics were that if AI wasn’t integral to your roadmap and marketing, you were losing relevance.

The major venture capital firms, from SoftBank to Khosla Ventures to Andreessen Horowitz, shifted their investments almost entirely into AI startups.

As this flood of AI messaging hit consumers, a lot of buyers quickly became numb to it.

That is especially true in 2025, when many of the AI promises are simply baseline expectations.

Buyers want to know what the product actually does. What is the value proposition?

Notion, for example, ran extensive tests comparing:

- AI in the hero headline

- AI in the hero headline and hero subheader

- AI just in the hero subheader

The version with AI only in the subheader won out.

They kept the focus on the core value proposition of helping teams write, plan and collaborate to create better workspaces, while still having the AI inclusion.

This trend of AI reinforcing and amplifying the core UVP (unique value proposition) is gaining momentum.

We see this shift to no AI in the hero header (but including it in the subheader) across dozens of top SaaS brands: Klaviyo, Monday.com, Freshworks, MongoDB, Snowflake, Braze, Cloudflare, GitLab, ActiveCampaign and countless others.

Another cohort of SaaS brands is simply removing AI verbiage altogether, from both the hero header and subheader.

Perpetua, featured below, is an example of this. Even brands with AI as a key part of their product often find that top-level AI messaging that obscures the core value prop or reduces clarity leads to suboptimal results.

#2 AI feature blocks win when they are aligned with the core objective of the page

A fundamental best practice in the world of CRO (Conversion Rate Optimization) is doing optimization through the lens of a UVS (Unified Variable Set).

A Unified Variable Set means that when multiple variables are being tested, all changes should ladder up to address a single focused objective.

Monday.com is one brand that built with a UVS in mind, incorporating AI feature blocks in a way that amplified its objective of highlighting deal progression.

The A/B test they ran kept the Monday AI block and got rid of the customizable block.

GoDaddy was also working through an optimization project aimed at painting a clearer picture of its simplicity, and the AI imagery and verbiage in its feature blocks helped make that case.

In their case, the losing version of the A/B test focused only on templates, while the winner tied back to their UVS of simplicity by showing the process more concretely.

Some brands, like Intercom, are so AI-centric that their UVS is based around being the premier AI-first ticketing and helpdesk tool. In those cases, you see that reflected across nearly all their feature blocks and winning tests.

Fundamentally, AI verbiage in feature blocks should be able to enhance the core value proposition you want to get across to users.

#3 Are brands running with losing tests?

Here is where the research gets really interesting.

A handful of brands, like GoDaddy, ran a test on AI verbiage in the hero header less than a year ago, and the less AI-centric version won out.

But fast forward to today and it’s all about AI in the header, subhead, everywhere…

While we can track which version wins an A/B test, we have no visibility into “implementations,” meaning the times a brand simply decides to change a page without A/B testing.

Other brands, like Talkdesk, that tested out of AI positioning in their header also followed the same trend, and today their homepage is…

What is happening here?

First, the clear disclosure: we don’t know.

We can only operate from our research and the tests that we detect and monitor.

The most likely reasons, I would speculate, are the following…

- The pressure from boards/investors/executives can be strong. Brands want to be seen as AI-first and AI-native, and especially when they are launching new AI features, they want to highlight them.

- AI is a rapidly evolving space. What may have been ineffective in 2024 (potentially when the AI product line was less developed) may have shifted as AI becomes a more integral part of the product.

- SaaS products can vary widely in how integral AI is to the core functionality. If the main part of your value proposition is the agentic AI aspect, as in, say, Talkdesk’s case, it might make sense to make that a focal point.

Ultimately, how brands talk about and position their AI will continue to evolve.

So many factors go into what makes for a successful test, from the traffic sources a brand leans into, to the competitive landscape, to the evolution of the field overall (2024 was the year of LLMs; 2025 is the year of agentic AI).

What we can share with you from DoWhatWorks is which A/B tests brands run and, across thousands of tests, which versions they decide to keep (we call a winner only after a brand has kept that version for 3+ months).
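To make the "kept for 3+ months" rule concrete, here is a minimal sketch of how such a check could look. It is not DoWhatWorks' actual detection logic; the function name, the date inputs, and the choice to approximate three months as 90 days are all assumptions made for illustration.

```python
from datetime import date, timedelta

# Assumption: "3+ months" is approximated as 90 days for this sketch.
MIN_HOLD = timedelta(days=90)


def is_confirmed_winner(first_seen_live: date, last_seen_live: date) -> bool:
    """Return True if a variant stayed live long enough to count as a winner.

    Both dates are hypothetical inputs: when the variant was first and most
    recently observed as the only version on the page.
    """
    return last_seen_live - first_seen_live >= MIN_HOLD


# A variant observed from January 10 through May 1 clears the threshold;
# one observed for only six weeks does not.
print(is_confirmed_winner(date(2025, 1, 10), date(2025, 5, 1)))
print(is_confirmed_winner(date(2025, 1, 10), date(2025, 2, 21)))
```

The point of a holding period like this is that a version a brand keeps for months is a stronger signal than one that merely ended a test on top.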

What’s Next?

The way brands package, position and sell AI features is rapidly changing.

At first, many brands simply added AI to their higher-tier plans.

At the time, this model worked.

AI usage was relatively low on a per-user basis, largely because users were experimenting or using AI sporadically. Most companies used pay-per-seat, flat-rate, or feature-gated pricing, confident that the incremental cost of AI usage would be covered by the higher-tier subscription fees.

But vendors underestimated how quickly AI usage would scale and how expensive it would become. As AI grew from novelty to necessity, usage patterns changed dramatically.

Instead of users running a few AI queries per day, power users began generating hundreds or even thousands of AI tasks daily, whether generating marketing copy, analyzing data, or automating customer support.

This surge in usage exposed the underlying cost structure: most SaaS vendors rely on third-party model providers like OpenAI, Anthropic, or Google, which charge on a per-token or compute-usage basis.

Suddenly, what was bundled as a “value-add” started becoming a margin-eater.
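A rough back-of-the-envelope sketch shows how a flat seat price can flip from profitable to loss-making as usage grows. Every number below is hypothetical and chosen only to illustrate the shape of the problem; none comes from a real vendor's price list or from our test data.

```python
# Hypothetical figures for illustration only.
SEAT_PRICE = 50.00          # flat monthly subscription fee per seat (USD)
COST_PER_1K_TOKENS = 0.01   # assumed blended model cost the vendor pays (USD)
TOKENS_PER_TASK = 2_000     # assumed average tokens consumed per AI task


def monthly_ai_cost(tasks_per_day: int, days: int = 30) -> float:
    """Vendor's model bill for one seat over a month."""
    tokens = tasks_per_day * days * TOKENS_PER_TASK
    return tokens / 1_000 * COST_PER_1K_TOKENS


def monthly_margin(tasks_per_day: int) -> float:
    """Seat revenue minus AI cost (all other costs are ignored here)."""
    return SEAT_PRICE - monthly_ai_cost(tasks_per_day)


# Compare an occasional user, a regular user, and a power user.
for tasks in (5, 100, 1_000):
    print(f"{tasks:>5} tasks/day -> margin ${monthly_margin(tasks):,.2f}")
```

Under these assumed numbers, the occasional user leaves most of the seat fee as margin, while the seat is already underwater at 100 tasks per day and deeply so at 1,000.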

Today, brands are actively exploring alternative AI monetization models. The most common shift is toward usage-based pricing: charging users directly for AI consumption, measured in queries, tokens, or credits.

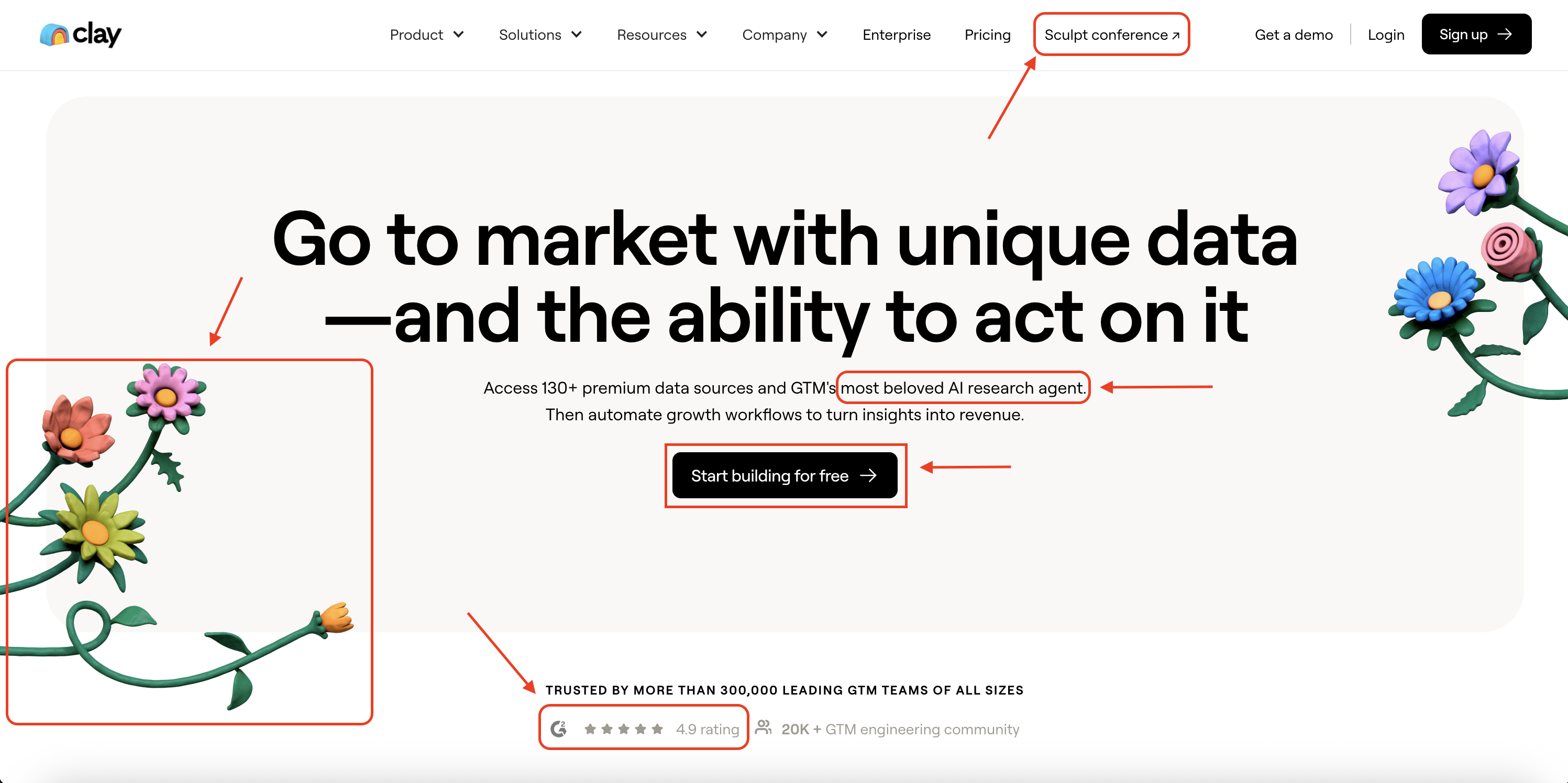

You see brands like Clay (charging on credits) and Intercom (charging by resolution) focusing on usage-based pricing.

Another emerging approach is offering AI as an explicit add-on, separate from the core subscription, so users pay proportionally to their AI usage. Some companies are experimenting with hybrid models, offering a baseline amount of AI usage included with plans but charging extra for heavy usage.
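The hybrid model described above can be sketched as a simple billing function: a flat plan fee that includes an AI allowance, plus a per-credit charge once the allowance is used up. The plan values and the `hybrid_bill` name are hypothetical and not taken from any brand mentioned here.

```python
def hybrid_bill(base_fee: float, included_credits: int,
                used_credits: int, overage_rate: float) -> float:
    """Monthly charge: flat plan fee plus a per-credit fee beyond the allowance."""
    overage = max(0, used_credits - included_credits)
    return base_fee + overage * overage_rate


# Hypothetical plan: $50/month, 1,000 AI credits included, $0.02 per extra credit.
PLAN = dict(base_fee=50.0, included_credits=1_000, overage_rate=0.02)

light_user = hybrid_bill(used_credits=400, **PLAN)    # stays within the allowance
heavy_user = hybrid_bill(used_credits=6_000, **PLAN)  # pays for 5,000 extra credits

print(f"light user: ${light_user:.2f}")
print(f"heavy user: ${heavy_user:.2f}")
```

The light user pays only the base fee, while the heavy user's bill grows with consumption, which is how this structure ties revenue back to the vendor's own usage-driven costs.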

This transition is still playing out, but the direction is clear: as AI has moved from an experimental feature to a core utility, brands are being forced to align pricing more closely to real usage and infrastructure costs, while balancing customer willingness to pay.

I look forward to tracking this trend over the next few quarters and sharing what tests brands are running and what is winning and losing.